Brief Research Overview

Robot learning is a field that enables robots to acquire and improve their capabilities through various learning techniques and algorithms. At the SMART Lab, we push the boundaries of robot intelligence through several interconnected research directions. We develop advanced learning algorithms that enhance robots' ability to perceive, reason about, and interact with their environment. Another significant portion of our research explores reinforcement learning approaches that allow robots to learn optimal behaviors through experience and interaction. We are particularly interested in human-in-the-loop robot learning, including Learning from Demonstration (LfD) and preference learning, which enables robots to learn directly from human teachers and adapt to human preferences. Recently, we have begun investigating how generative AI technologies can revolutionize robot learning, exploring ways to leverage large language models and other generative AI systems to enhance robots' learning capabilities and decision-making processes. This cutting-edge research aims to create more adaptable and intelligent robotic systems that can better understand and respond to human needs.

You can learn more about our current and past research on robot learning below.

Generative AI based Robot Reasoning and Learning (2022 - Present)

Description: Generative AI (GAI), particularly Large Language Models (LLMs) and Vision-Language Models (VLMs), represents a groundbreaking advancement in artificial intelligence. These foundation models, trained on vast datasets of knowledge and experience, exhibit remarkable general reasoning capabilities that can be harnessed for robotics applications. At the SMART Lab, we are at the forefront of integrating generative AI with robotic systems to enhance their cognitive and learning abilities. Our recent research explores multiple innovative applications: utilizing LLMs for intelligent task allocation and coordination in multi-robot and human-robot teams; developing LLM-powered approaches for improved robot semantic navigation and scene understanding; and investigating how crowdsourced LLMs can serve as synthetic teachers in robot learning scenarios, providing valuable feedback and guidance. By harnessing the power of generative AI, we aim to revolutionize the way robots reason, learn, and interact, paving the way for more capable and adaptable robotic systems.

Grants: NSF (IIS #1846221), NSF (DRL #2418688), Purdue University

People: Vishnunandan Venkatesh, Ruiqi Wang, Taehyeon Kim, Ziqin Yuan, Ikechukwu Obi, Arjun Gupte

Selected Publications:

- L. N. Vishnunandan Venkatesh and Byung-Cheol Min, "ZeroCAP: Zero-Shot Multi-Robot Context Aware Pattern Formation via Large Language Models", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link

- Ruiqi Wang*, Dezhong Zhao*, Ziqin Yuan, Ike Obi, and Byung-Cheol Min (* equal contribution), "PrefCLM: Enhancing Preference-based Reinforcement Learning with Crowdsourced Large Language Models", IEEE Robotics and Automation Letters, Vol. 10, No. 3, pp. 2486-2493, March 2025. Paper Link, Video Link

- Shyam Sundar Kannan*, L. N. Vishnunandan Venkatesh*, and Byung-Cheol Min (*equal contribution), "SMARTLLM: Smart Multi-Agent Robot Task Planning using Large Language Models", 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Abu Dhabi, UAE, October 13-17, 2024. Paper Link, Video Link

- Taehyeon Kim and Byung-Cheol Min, "Semantic Layering in Room Segmentation via LLMs", 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Abu Dhabi, UAE, October 13-17, 2024. Paper Link, Video Link

Preference-Based Reinforcement Learning (2021 - Present)

Description: Reinforcement Learning (RL) traditionally requires precisely defined reward functions to guide robot behavior, a challenging requirement in complex human-robot interaction scenarios. Preference-based Reinforcement Learning (PbRL) offers an innovative solution by learning from human comparative feedback rather than predefined rewards, making it more intuitive to teach robots desired behaviors. At the SMART Lab, we are advancing PbRL techniques to address key challenges in the field, such as the need for efficient learning from minimal human feedback and the complexity of modeling human preferences. Our research focuses on developing algorithms that can better interpret various forms of human feedback while requiring fewer interactions. Through innovations like our feedback-efficient active preference learning approach, we aim to make robot learning more natural and practical for real-world applications.
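As a rough illustration of how comparative feedback can stand in for a hand-written reward, the sketch below fits a reward model from pairwise preferences using the Bradley-Terry formulation that is common in the PbRL literature. It is a minimal sketch under stated assumptions, not the lab's published method: the linear reward model, the two-feature toy trajectories, and the names `segment_return`, `preference_probability`, and `fit_reward` are all hypothetical and chosen only for illustration.

```python
import math


def segment_return(weights, segment):
    """Predicted return of a trajectory segment under a linear reward r(s) = w . s.

    `segment` is a list of per-step feature vectors (an illustrative assumption).
    """
    return sum(sum(w * x for w, x in zip(weights, step)) for step in segment)


def preference_probability(weights, seg_a, seg_b):
    """Bradley-Terry probability that segment A is preferred over segment B."""
    diff = segment_return(weights, seg_a) - segment_return(weights, seg_b)
    return 1.0 / (1.0 + math.exp(-diff))


def fit_reward(comparisons, n_features, lr=0.1, epochs=200):
    """Fit reward weights by gradient descent on the cross-entropy preference loss.

    Each comparison is (seg_a, seg_b, label), with label 1.0 if the human
    preferred A and 0.0 if the human preferred B.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for seg_a, seg_b, label in comparisons:
            p = preference_probability(weights, seg_a, seg_b)
            for i in range(n_features):
                # Summed feature i over each segment.
                phi_a = sum(step[i] for step in seg_a)
                phi_b = sum(step[i] for step in seg_b)
                # Gradient of the cross-entropy loss w.r.t. weight i.
                weights[i] -= lr * (p - label) * (phi_a - phi_b)
    return weights


# Toy data: feature 0 is behavior the human likes, feature 1 is behavior they dislike.
liked = [[1.0, 0.0], [1.0, 0.0]]
disliked = [[0.0, 1.0], [0.0, 1.0]]
toy_comparisons = [
    (liked, disliked, 1.0),            # human preferred the first segment
    ([[0.0, 1.0]], [[1.0, 0.0]], 0.0),  # human preferred the second segment
]
learned = fit_reward(toy_comparisons, n_features=2)
print(learned, preference_probability(learned, liked, disliked))
```

In a real system the linear model would typically be replaced by a neural reward network, and the learned reward would then be passed to a standard RL algorithm. The point here is only that each human comparison becomes one supervised training example.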

Grants: NSF (IIS #1846221), Purdue University

People: Ruiqi Wang, Weizheng Wang, Ziqin Yuan, Ikechukwu Obi

Selected Publications:

- Weizheng Wang, Chao Yu, Yu Wang, and Byung-Cheol Min, "Human-Robot Cooperative Distribution Coupling for Hamiltonian-Constrained Social Navigation", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link, Website Link

- Ruiqi Wang*, Dezhong Zhao*, Dayoon Suh, Ziqin Yuan, Guohua Chen, and Byung-Cheol Min (*equal contribution), "Personalization in Human-Robot Interaction through Preference-based Action Representation Learning", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link, Website Link

- Weizheng Wang, Ruiqi Wang, Le Mao, and Byung-Cheol Min, "NaviSTAR: Benchmarking Socially Aware Robot Navigation with Hybrid Spatio-Temporal Graph Transformer and Active Learning", 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, USA, October 1-5, 2023. Paper Link, Video Link, GitHub Link

- Ruiqi Wang, Weizheng Wang, and Byung-Cheol Min, "Feedback-efficient Active Preference Learning for Socially Aware Robot Navigation", 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Kyoto, Japan, October 23-27, 2022. Paper Link, Video Link, GitHub Link

Learning from Demonstration (2021 - Present)

Description: Learning from Demonstration (LfD) is a powerful paradigm that enables robots to learn new skills by observing and replicating human demonstrations. This approach bridges the gap between human expertise and robot capabilities, making it more intuitive for non-experts to teach robots complex behaviors. At the SMART Lab, we are advancing the frontiers of LfD research, with a particular focus on its application to Multi-Robot Systems (MRS). While traditional LfD has primarily focused on single-robot scenarios, we are pioneering methods to extend these principles to multiple robots working in coordination. Our innovative framework leverages visual demonstrations to capture intricate robot-object interactions and complex collaborative behaviors. By developing sophisticated algorithms that can translate human demonstrations into coordinated multi-robot actions, we aim to make robot teaching more accessible and efficient.

Grants: NSF (IIS #1846221), Purdue University

People: Vishnunandan Venkatesh, Taehyeon Kim, Ruiqi Wang

Selected Publications:

- L. N. Vishnunandan Venkatesh and Byung-Cheol Min, "Learning from Demonstration Framework for Multi-Robot Systems Using Interaction Keypoints and Soft Actor-Critic Methods", 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Abu Dhabi, UAE, October 13-17, 2024. Paper Link, Video Link

- Ruiqi Wang, Weizheng Wang, and Byung-Cheol Min, "Feedback-efficient Active Preference Learning for Socially Aware Robot Navigation", 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Kyoto, Japan, October 23-27, 2022. Paper Link, Video Link, GitHub Link

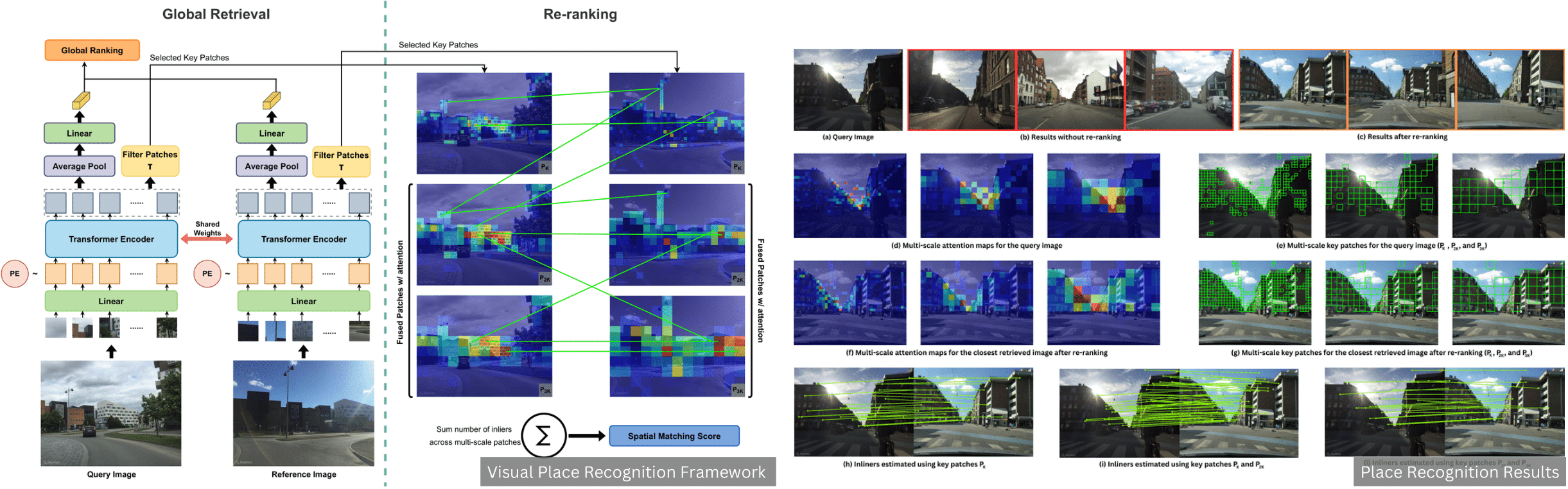

Visual Localization and Mapping (2022 - Present)

Description: Visual localization enables autonomous vehicles and robots to navigate based on visual observations of their operating environment. In visual localization, the agent estimates its pose based on the image from the camera. The operating environment of the agent can undergo various changes due to illumination, day and night, seasons, structural changes, and so on. In vision-based localization, it is important to adapt to these changes, which can significantly impact visual perception. The SMART Lab develops methods that enable autonomous agents to localize robustly despite these changes in the surroundings. For example, we developed a visual place recognition system that helps the autonomous agent identify its location on a large-scale map by retrieving a reference image that closely matches the query image from the camera. The proposed method uses concise descriptors from the image, so that image processing can be done rapidly with less memory consumption.
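The retrieval step described above can be sketched as a nearest-neighbor search over image descriptors. This is a generic illustration and not the PlaceFormer pipeline: the three-dimensional descriptors, the place names, and the functions `cosine_similarity` and `retrieve_place` are hypothetical, and a real system would compute descriptors with a learned model and often re-rank the top candidates.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two descriptor vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_place(query_descriptor, reference_db):
    """Return (place_id, score) for the reference descriptor closest to the query.

    `reference_db` maps a place identifier to the descriptor of its reference image.
    """
    scored = (
        (place_id, cosine_similarity(query_descriptor, descriptor))
        for place_id, descriptor in reference_db.items()
    )
    return max(scored, key=lambda pair: pair[1])


# Hypothetical map with two reference images and one query from the camera.
reference_db = {
    "lobby": [1.0, 0.0, 0.0],
    "lab": [0.0, 1.0, 0.0],
}
best_place, score = retrieve_place([0.9, 0.1, 0.0], reference_db)
print(best_place, score)
```

Shorter descriptors make each comparison cheaper and shrink the stored map, which is the trade-off the description refers to when it mentions speed and memory consumption.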

Grants: Purdue University

People: Shyam Sundar Kannan, Vishnunandan Venkatesh

Selected Publications:

- Shyam Sundar Kannan and Byung-Cheol Min, "ZeroSCD: Zero-Shot Street Scene Change Detection", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link

- Shyam Sundar Kannan and Byung-Cheol Min, "PlaceFormer: Transformer-based Visual Place Recognition using Multi-Scale Patch Selection and Fusion", IEEE Robotics and Automation Letters, Vol. 9, No. 7, pp. 6552-6559, July 2024. Paper Link

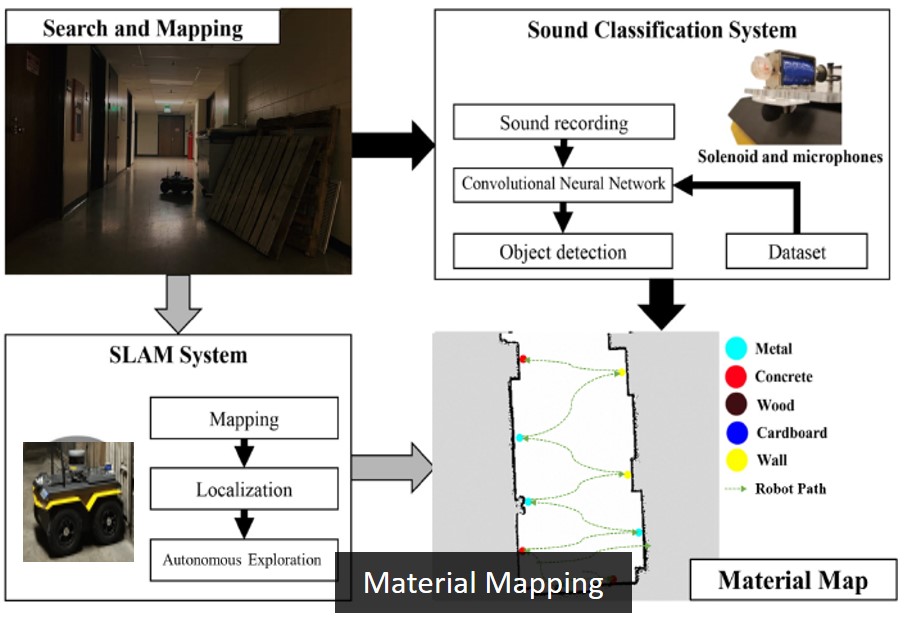

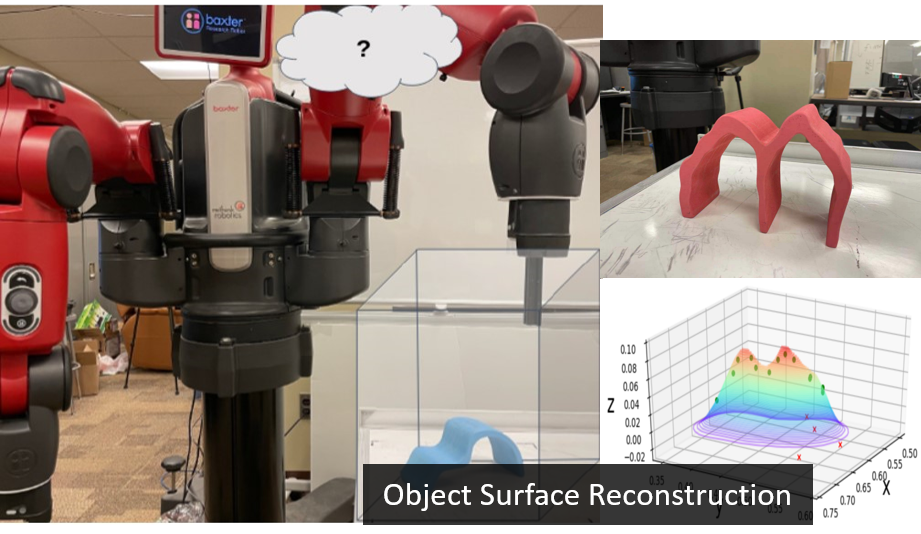

Learning-based Robot Recognition (2017 - 2022)

Description: The SMART Lab is researching learning-based robot recognition technology to enable robots to recognize and identify objects/scenes in real-time with the same ease as humans, even in dynamic environments and with limited information. We aim to apply our research and developments to a variety of applications, including the navigation of autonomous robots/cars in dynamic environments, the detection of malware/cyberattacks, object classification and reconstruction, the prediction of the cognitive and affective states of humans, and the allocation of workloads within human-robot teams. For example, we developed a system in which a mobile robot autonomously navigates an unknown environment through simultaneous localization and mapping (SLAM) and uses a tapping mechanism to identify objects and materials in the environment. The robot taps an object with a linear solenoid and uses a microphone to measure the resulting sound, allowing it to identify the object and material. We used convolutional neural networks (CNNs) to develop the associated tapping-based material classification system.

Grants: Purdue University

People: Wonse Jo, Shyam Sundar Kannan, Go-Eum Cha, Vishnunandan Venkatesh, Ruiqi Wang

Selected Publications:

- Su Sun and Byung-Cheol Min, "Active Tapping via Gaussian Process for Efficient Unknown Object Surface Reconstruction", 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Workshop on RoboTac 2021: New Advances in Tactile Sensation, Interactive Perception, Control, and Learning. A Soft Robotic Perspective on Grasp, Manipulation, & HRI, Prague, Czech Republic, Sep 27 – Oct 1, 2021. Paper Link

- Shyam Sundar Kannan, Wonse Jo, Ramviyas Parasuraman, and Byung-Cheol Min, "Material Mapping in Unknown Environments using Tapping Sound", 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2020), Las Vegas, NV, USA, 25-29 October, 2020. Paper Link, Video Link

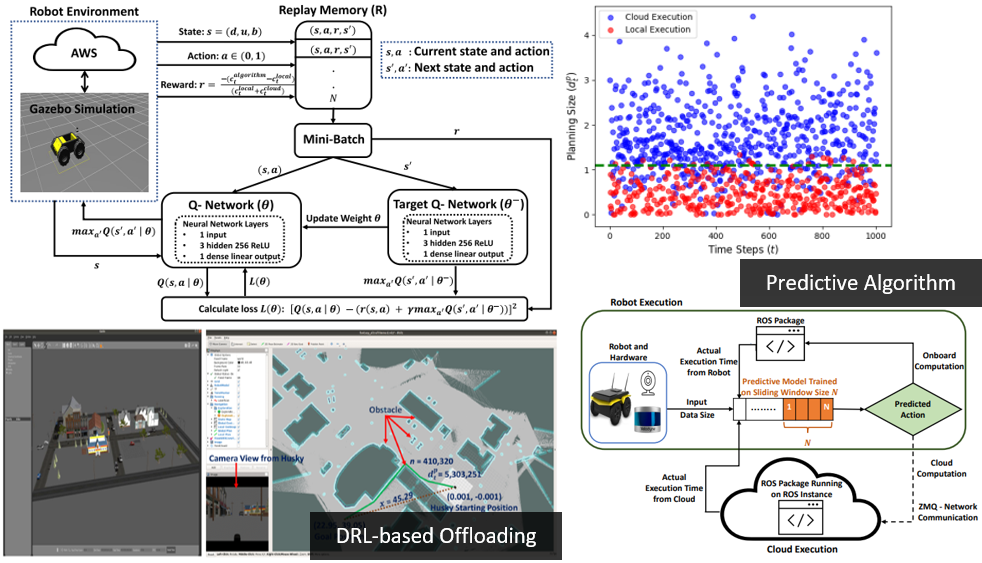

Application Offloading Problem (2018 - 2022)

Description: Robots come with a variety of computing capabilities, and running computationally-intensive applications on robots can be challenging due to their limited onboard computing, storage, and power capabilities. Cloud computing, on the other hand, provides on-demand computing capabilities, making it a potential solution for overcoming these resource constraints. However, effectively offloading tasks requires an application solution that does not underutilize the robot's own computational capabilities and makes decisions based on cost parameters such as latency and CPU availability. In this research, we address the application offloading problem: how to design an efficient offloading framework and algorithm that optimally uses a robot's limited onboard capabilities and quickly reaches a consensus on when to offload without any prior knowledge of the application. Recently, we developed a predictive algorithm to predict the execution time of an application under both cloud and onboard computation, based on the size of the application's input data. This algorithm is designed for online learning, meaning it can be trained after the application has been initiated. In addition, we formulated the offloading problem as a Markov decision process and developed a deep reinforcement learning-based Deep Q-network (DQN) approach.
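To make the predictive idea concrete, the sketch below learns execution time as a function of input size online, one model for onboard execution and one for the cloud, and offloads when the cloud is predicted to be faster. It is only an illustration under loud assumptions: the linear relationship between input size and time, the class `OnlineLinearPredictor`, the function `should_offload`, and the timing numbers are all hypothetical and are not taken from the published algorithm.

```python
class OnlineLinearPredictor:
    """Simple linear regression updated one observation at a time.

    Only running sums are stored, so the model can be refined while the
    application is already running (the online-learning setting).
    """

    def __init__(self):
        self.n = 0
        self.sx = self.sy = self.sxx = self.sxy = 0.0

    def update(self, input_size, seconds):
        """Record one measured execution time for a given input size."""
        self.n += 1
        self.sx += input_size
        self.sy += seconds
        self.sxx += input_size * input_size
        self.sxy += input_size * seconds

    def predict(self, input_size):
        """Predict execution time; falls back to the mean if a slope cannot be fit."""
        if self.n == 0:
            raise ValueError("no observations yet")
        denom = self.n * self.sxx - self.sx ** 2
        if abs(denom) < 1e-12:
            return self.sy / self.n
        slope = (self.n * self.sxy - self.sx * self.sy) / denom
        intercept = (self.sy - slope * self.sx) / self.n
        return intercept + slope * input_size


def should_offload(onboard, cloud, input_size):
    """Offload when the predicted cloud time is lower than the predicted onboard time."""
    return cloud.predict(input_size) < onboard.predict(input_size)


# Hypothetical measurements: the cloud has a fixed latency cost but scales better.
onboard, cloud = OnlineLinearPredictor(), OnlineLinearPredictor()
for size, t_onboard, t_cloud in [(1, 0.5, 2.1), (2, 1.0, 2.2), (4, 2.0, 2.4)]:
    onboard.update(size, t_onboard)
    cloud.update(size, t_cloud)

print(should_offload(onboard, cloud, 10), should_offload(onboard, cloud, 2))
```

A fixed rule like `should_offload` ignores changing network latency and CPU availability, which is one reason to treat the decision as a sequential problem and learn a policy instead.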

Grants: Purdue University

People: Manoj Penmetcha, Shyam Sundar Kannan

Selected Publications:

- Manoj Penmetcha and Byung-Cheol Min, "A Deep Reinforcement Learning-based Dynamic Computational Offloading Method for Cloud Robotics", IEEE Access, Vol. 9, pp. 60265-60279, 2021. Paper Link, Video Link

- Manoj Penmetcha, Shyam Sundar Kannan, and Byung-Cheol Min, "A Predictive Application Offloading Algorithm using Small Datasets for Cloud Robotics", 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Virtual, Melbourne, Australia, 17-20 October, 2021. Paper Link, Video Link