Brief Research Overview

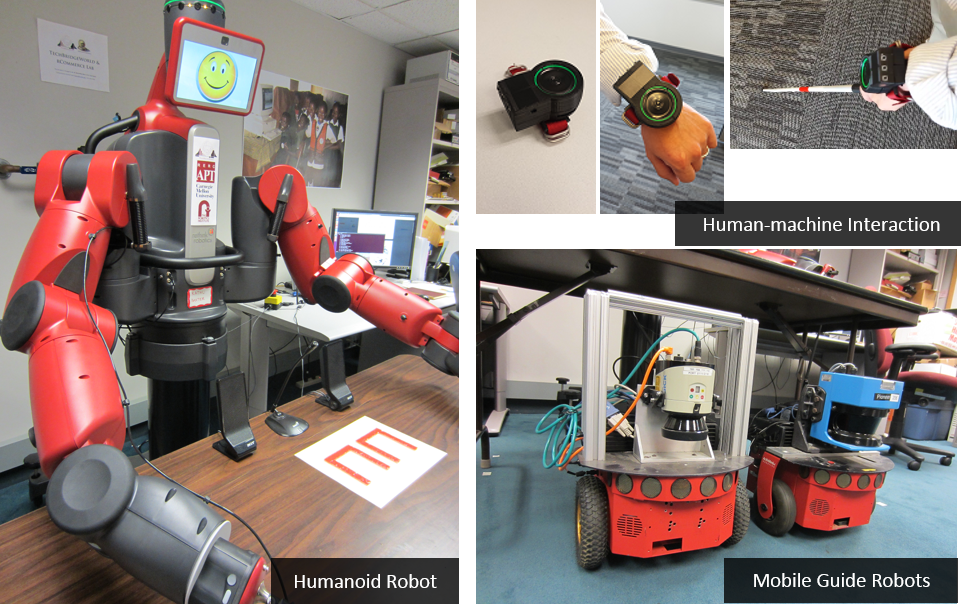

Human-Robot Interaction (HRI) investigates the design and development of robotic systems that can interact naturally and effectively with humans. As robots increasingly become integral parts of our society, creating intuitive and reliable human-robot interactions has never been more critical. The SMART Lab's journey in HRI research began with pioneering work on assistive robots for visually impaired travelers when Dr. Min worked on the NSF/NRI project at Carnegie Mellon University. Since then, our HRI research portfolio has expanded to several cutting-edge areas, including multi-human multi-robot systems, human multi-robot/swarm interaction, affective computing, socially-aware robot navigation, human-machine interfaces, and assistive technology and robotics.

You can learn more about our current and past research on human-robot interaction below.

Human Multi-robot Systems (2018 - Present)

Description: The emerging field of human multi-robot systems and multi-human multi-robot interaction explores how teams of humans and multiple robots can effectively collaborate. This cutting-edge research area has transformative potential for complex operations including environmental exploration, surveillance, and disaster response. Drawing on our extensive expertise in multi-robot systems, swarm robotics, human-robot interaction, and assistive technology, the SMART Lab is developing groundbreaking solutions in this domain. Our current research focuses on creating distributed algorithms that enable seamless robot-to-robot collaboration, developing adaptive interaction systems that allow robots to work efficiently with humans in diverse environments and scenarios, and building practical applications that demonstrate the real-world potential of human multi-robot systems. Our vision is to democratize human-robot collaboration, making it accessible to everyone, from novice users to those with disabilities. We are working toward a future where humans and robot teams can seamlessly partner on a wide range of practical tasks, regardless of the users' technical expertise or the number of robots involved.

Grant: NSF (IIS #1846221), NSF (CMMI #2222838), NSF (DRL #2418688)

People: Ruiqi Wang, Vishnunandan Venkatesh, Ikechukwu Obi, Arjun Gupte, Jeremy Pan, Wonse Jo, Go-Eum Cha

Project Website: https://polytechnic.purdue.edu/ahmrs

Selected Publications:

- Ziqin Yuan*, Ruiqi Wang*, Taehyeon Kim, Dezhong Zhao, Ike Obi, and Byung-Cheol Min (* equal contribution), "Adaptive Task Allocation in Multi-Human Multi-Robot Teams under Team Heterogeneity and Dynamic Information Uncertainty", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link, Website Link

- Wonse Jo, Ruiqi Wang, Baijian Yang, Dan Foti, Mo Rastgaar, and Byung-Cheol Min, "Cognitive Load-based Affective Workload Allocation for Multi-Human Multi-Robot Teams", IEEE Transactions on Human-Machine Systems, Vol. 55, No. 1, pp. 23-36, February 2025. Paper Link, Video Link

- Wonse Jo*, Ruiqi Wang*, Go-Eum Cha, Su Sun, Revanth Senthilkumaran, Daniel Foti, and Byung-Cheol Min (* equal contribution), "MOCAS: A Multimodal Dataset for Objective Cognitive Workload Assessment on Simultaneous Tasks", IEEE Transactions on Affective Computing, Early Access, 2024. Paper Link, Video Link

- Ruiqi Wang*, Dezhong Zhao*, Arjun Gupte, and Byung-Cheol Min (* equal contribution), "Initial Task Assignment in Multi-Human Multi-Robot Teams: An Attention-enhanced Hierarchical Reinforcement Learning Approach", IEEE Robotics and Automation Letters, Vol. 9, No. 4, pp. 3451-3458, April 2024. Paper Link, Video Link

- Ruiqi Wang, Dezhong Zhao, and Byung-Cheol Min, "Initial Task Allocation for Multi-Human Multi-Robot Teams with Attention-based Deep Reinforcement Learning", 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, USA, October 1-5, 2023. Paper Link, Video Link

- Ahreum Lee, Wonse Jo, Shyam Sundar Kannan, and Byung-Cheol Min, "Investigating the Effect of Deictic Movements of a Multi-robot", International Journal of Human-Computer Interaction, Vol. 37, No. 3, pp. 197-210, 2021. Paper Link, Video Link

- Tamzidul Mina, Shyam Sundar Kannan, Wonse Jo, and Byung-Cheol Min, "Adaptive Workload Allocation for Multi-human Multi-robot Teams for Independent and Homogeneous Tasks", IEEE Access, Vol. 8, pp. 152697-152712, 2020. Paper Link, Video Link

Socially-Aware Robot Navigation (2021 - Present)

Description: Socially-aware robot navigation (SAN) represents a fundamental challenge in human-robot interaction, where robots must navigate to their goals while maintaining comfortable and socially appropriate interactions with humans. This complex task requires robots to understand and respect human spatial preferences and social norms while avoiding collisions. While learning-based approaches have shown promise compared to traditional model-based methods, significant challenges remain in capturing the full complexity of crowd dynamics. These include the subtle interplay of human-human and human-robot interactions, and how various environmental contexts influence social behavior. The SMART Lab's research advances this field by developing sophisticated algorithms that better encode and interpret intricate social dynamics across diverse environments. Through innovative deep learning techniques, we enable robots to understand and adapt to human behavioral patterns in different contexts. Our work aims to create navigation systems that demonstrate unprecedented awareness of social nuances, leading to more natural and acceptable robot movement in human spaces.

Grant: NSF (IIS #1846221)

People: Ruiqi Wang, Weizheng Wang

Project Website: https://sites.google.com/view/san-fapl; https://sites.google.com/view/san-navistar

Selected Publications:

- Weizheng Wang, Aniket Bera, and Byung-Cheol Min, "Hyper-SAMARL: Hypergraph-based Coordinated Task Allocation and Socially-aware Navigation for Multi-Robot Systems", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link, Website Link

- Weizheng Wang, Chao Yu, Yu Wang, and Byung-Cheol Min, "Human-Robot Cooperative Distribution Coupling for Hamiltonian-Constrained Social Navigation", IEEE International Conference on Robotics and Automation (ICRA), Atlanta, USA, 19-23 May, 2025. (Accepted) Paper Link, Video Link, Website Link

- Weizheng Wang, Le Mao, Ruiqi Wang, and Byung-Cheol Min, "Multi-Robot Cooperative Socially-Aware Navigation using Multi-Agent Reinforcement Learning", IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, May 13-17, 2024. Paper Link, Video Link

- Weizheng Wang, Ruiqi Wang, Le Mao, and Byung-Cheol Min, "NaviSTAR: Benchmarking Socially Aware Robot Navigation with Hybrid Spatio-Temporal Graph Transformer and Active Learning", 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, USA, October 1-5, 2023. Paper Link, Video Link, GitHub Link

- Ruiqi Wang, Weizheng Wang, and Byung-Cheol Min, "Feedback-efficient Active Preference Learning for Socially Aware Robot Navigation", 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Kyoto, Japan, October 23-27, 2022. Paper Link, Video Link, GitHub Link

Affective Computing (2019 - Present)

Description: The estimation of human affective states, such as emotional states and cognitive workload, for effective human-robot interaction has gained increased attention. The emergence of new robotics middleware such as ROS has also contributed to the growth of HRI research that integrates affective computing with robotic systems. We believe that human affective states play a significant role in human-robot interaction, especially human-robot collaboration, and we are conducting research across affective computing, from framework design to dataset design and creation to algorithm development. For example, we recently developed a ROS-based framework that enables the simultaneous monitoring of various human physiological and behavioral data and robot conditions for human-robot collaboration. We also developed and published a ROS-friendly multimodal dataset comprising physiological data measured using wearable devices and behavioral data recorded using external devices. Currently, we are exploring machine learning and deep learning-based methods (e.g., Transformers) for real-time prediction of human affective states.

Grant: NSF (IIS #1846221)

People: Wonse Jo, Go-Eum Cha, Ruiqi Wang, Revanth Krishna Senthilkumaran

Project Website: https://polytechnic.purdue.edu/ahmrs

Selected Publications:

- Jinjin Cai*, Ruiqi Wang*, Dezhong Zhao, Ziqin Yuan, Victoria McKenna, Aaron Friedman, Rachel Foot, Susan Storey, Ryan Boente, Sudip Vhaduri, and Byung-Cheol Min (*equal contribution), "Multimodal Audio-based Disease Prediction with Transformer-based Hierarchical Fusion Network", IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2025. (In Press) Paper Link, Website Link, GitHub Link

- Ruiqi Wang*, Wonse Jo*, Dezhong Zhao, Weizheng Wang, Baijian Yang, Guohua Chen, and Byung-Cheol Min (* equal contribution), "Husformer: A Multi-Modal Transformer for Multi-Modal Human State Recognition", IEEE Transactions on Cognitive and Developmental Systems, Vol. 16, No. 4, pp. 1374-1390, August 2024. Paper Link, GitHub Link

- Wonse Jo*, Ruiqi Wang*, Go-Eum Cha, Su Sun, Revanth Senthilkumaran, Daniel Foti, and Byung-Cheol Min (* equal contribution), "MOCAS: A Multimodal Dataset for Objective Cognitive Workload Assessment on Simultaneous Tasks", IEEE Transactions on Affective Computing, Early Access, 2024. Paper Link, Video Link

- Go-Eum Cha, Wonse Jo, and Byung-Cheol Min, "Implications of Personality on Cognitive Workload, Affect, and Task Performance in Robot Remote Control", 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, USA, October 1-5, 2023. Paper Link, Video Link

- Go-Eum Cha and Byung-Cheol Min, "Correlation between Unconscious Mouse Actions and Human Cognitive Workload", 2022 ACM CHI Conference on Human Factors in Computing Systems - Late-Breaking Work, New Orleans, LA, USA, April 30–May 6, 2022. Paper Link, Video Link

Human-Delivery Robot Interaction (2019 - 2023)

Description: As delivery robots become more capable and necessary for quick and economical delivery of goods, there is increasing interest in using robots for last-mile delivery. However, current research and services involving delivery robots are still far from meeting the growing demand in this area, let alone being fully integrated into our lives. The SMART Lab investigates various practical and theoretical topics in robot delivery, including vehicle routing for drones, localization of a requested delivery spot, and social interaction between package recipients and delivery robots. To do this, we use mathematical methods to solve optimization problems and experimental methods based on user studies. We expect that this research will play a major role in enabling delivery robots to deliver packages more intelligently and effectively, like professional human couriers, and that it will improve human-delivery robot interaction while increasing robot autonomy.

Grant: Purdue University

People: Shyam Sundar Kannan, Ahreum Lee

Selected Publications:

- Shyam Sundar Kannan and Byung-Cheol Min, "Autonomous Drone Delivery to Your Door and Yard", 2022 International Conference on Unmanned Aircraft Systems (ICUAS), Dubrovnik, Croatia, June 21-24, 2022. Paper Link, Video Link

- Shyam Sundar Kannan and Byung-Cheol Min, "Investigation on Accepted Package Delivery Location: A User Study-based Approach", 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Virtual, Melbourne, Australia, 17-20 October, 2021. Paper Link

- Shyam Sundar Kannan, Ahreum Lee, and Byung-Cheol Min, "External Human-Machine Interface on Delivery Robots: Expression of Navigation Intent of the Robot", 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Virtual, Vancouver, Canada, 8-12 August, 2021. Paper Link, Video Link

- Patchara Kitjacharoenchai, Byung-Cheol Min, and Seokcheon Lee, "Two Echelon Vehicle Routing Problem with Drones in Last Mile Delivery", International Journal of Production Economics, Vol. 25, 2020. Paper Link

Assistive Technology and Robots for People who are Blind or Visually Impaired (2014 - 2018)

Description: The World Health Organization (WHO) estimates that 285 million people in the world are visually impaired, with 39 million being blind. While safe and independent mobility is essential in modern life, traveling in unfamiliar environments can be challenging and daunting for people who are blind or visually impaired due to a lack of appropriate navigation aids. To address this challenge, the SMART Lab investigates practical and theoretical research topics on human-machine interaction and human-robot interaction in the context of assistive technology and robotics. Our primary research goal is to empower people with disabilities to safely and independently travel to and navigate unfamiliar environments. To achieve this, we have developed improved navigation aids that will enable visually impaired people to travel through unfamiliar environments safely and independently with minimal training and effort. We have also introduced an indoor navigation application that lets a blind user request help in both emergency and non-emergency situations.

Grants: Purdue University

People: Yeonju Oh, Manoj Penmetcha, Arabinda Samantaray

Selected Publications:

- Yeonju Oh, Wei-Liang Kao, and Byung-Cheol Min, "Indoor Navigation Aid System Using No Positioning Technique for Visually Impaired People", HCI International 2017 - Poster Extended Abstract, Vancouver, Canada, 9-14 July, 2017. Paper Link, Video Link

- Manoj Penmetcha, Arabinda Samantaray, and Byung-Cheol Min, "SmartResponse: Emergency and Non-Emergency Response for Smartphone based Indoor Localization applications", HCI International 2017 - Poster Extended Abstract, Vancouver, Canada, 9-14 July, 2017. Paper Link

- Byung-Cheol Min, Suryansh Saxena, Aaron Steinfeld, and M. Bernardine Dias, "Incorporating Information from Trusted Sources to Enhance Urban Navigation for Blind Travelers", 2015 IEEE International Conference on Robotics and Automation (ICRA), pp. 4511-4518, Seattle, USA, May 26-30, 2015. (Paper Link)

- Byung-Cheol Min, Aaron Steinfeld, and M. Bernardine Dias, "How Would You Describe Assistive Robots to People Who are Blind or Low Vision?", Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI) Extended Abstracts, Portland, USA, Mar. 2-5, 2015. (Paper Link)

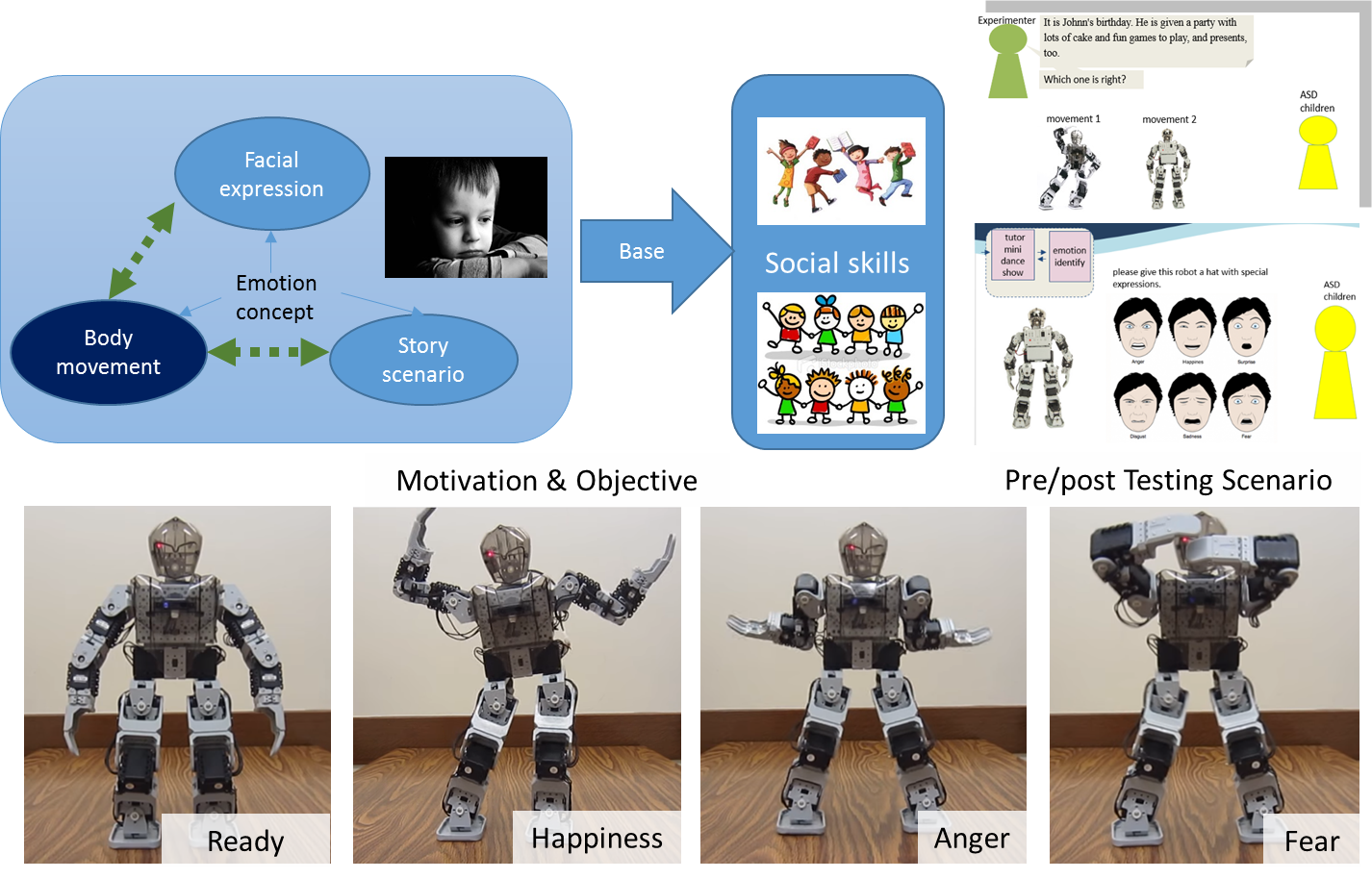

Assistive Technology and Robots for Children with Autism Spectrum Disorder (ASD) (2015 - 2017)

Description: Autism spectrum disorder (ASD) is one of the most significant public health concerns in the United States and globally. Children with ASD have impaired ability in social interaction, social communication, and imagination and often have poor verbal ability. Several approaches have been used to help them, including the use of humanoid robots as a new tool for teaching them. Robots can offer simplified physical features and a controllable environment that are preferred by autistic children, as well as a human-like conversational environment suitable for learning about emotions and social skills. The SMART Lab is designing a set of robot body movements that express different emotions and a robot-mediated instruction prototype to explore the potential of robots to teach emotional concepts to autistic children. We are also studying a technical methodology that can be easily deployed in the daily environment of children with ASD and teach language to them at low cost, based on embedded devices and semantic information that can be extended to a cyber-physical system in the future. This method will provide verbal descriptions of objects and adapt the level of descriptions to the child's learning achievements.

Grants: Purdue University

People: Huanhuan Wang, Pai-Ying Hsiao, Sangmi Shin

Selected Publications:

- Sangmi Shin, Byung-Cheol Min, Julia Rayz, and Eric T. Matson, "Semantic Knowledge-based Language Education Device for Children with Developmental Disabilities", IEEE Robotic Computing (IRC) 2017, Taichung, Taiwan, April 10-12, 2017. Download PDF

- Huanhuan Wang, Pai-Ying Hsiao, and Byung-Cheol Min, "Examine the Potential of Robots to Teach Autistic Children Emotional Concepts", The Eighth International Conference on Social Robotics (ICSR), Kansas City, USA, Nov. 1-3, 2016. Download PDF